Large Language Model Llama2 as your own “ChatGPT”

At this point, we are all part of the AI hype, and everybody knows what chatbots are. The most widely used models are currently GPT-4, Bard, BLOOM, and Ernie 3.0 Titan, among others. These models are resource-intensive and usually run in the cloud, so using them requires an internet connection.

Follow this guide to learn how to turn the large language model Llama2 into your own “ChatGPT”, running on your own machine.

What is GPT-4?

GPT-4, short for ‘Generative Pre-trained Transformer 4’, is a state-of-the-art large language model developed by OpenAI. Building on the success of its predecessors, GPT-4 is widely believed to use an even larger neural network, and it generates human-like text with noticeably better accuracy and coherence than earlier models.

Its vast training data, which spans a wide array of internet text up until 2021, empowers it to answer questions, write essays, create content, and even engage in real-time conversations. The model’s adaptability and versatility have positioned it at the forefront of AI-driven content generation, making it a pivotal tool in various sectors, from content creation and customer service to research and education.

Disclaimer!

Llama2 models are massive and need a lot of computing power. With a single RTX 3080 with 8 GB of memory, I could run the “tiny” 7B-parameter chat model using all of the card's resources, and it took about 4 minutes to get an answer. For the 13B and 70B models, it’s recommended to use multiple GPUs.

If you don’t have NASA equipment, remember that this will be merely an academic exercise.

You will need a CUDA-capable GPU, enough memory to load the model, and enough disk space to store it.
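To put "massive" into numbers, a common rule of thumb (our addition, not from Meta's documentation) is that the weights alone need about parameters × bytes per parameter of memory, and 16-bit weights use 2 bytes per parameter. The sketch below ignores activations and the attention cache, so real usage is higher; `weight_memory_gb` is a hypothetical helper.

```python
# Rough estimate of the memory needed just to hold the model weights.
# Assumption: 16-bit (fp16/bf16) weights = 2 bytes per parameter;
# activations and the attention (KV) cache are NOT included.

def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    print(f"Llama 2 {name}: ~{weight_memory_gb(params):.0f} GB in 16-bit")
```

This prints roughly 14, 26, and 140 GB, which makes it clear why the 13B and 70B models are usually split across several GPUs.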

What is LLM Llama2?

Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs) developed by Meta AI. These models range in scale from 7 billion to 70 billion parameters. The fine-tuned LLMs, called Llama 2-Chat, are optimized for dialogue use cases. Llama 2 is the successor to LLaMA (henceforth “Llama 1”) and was trained on more data, has double the context length, and was tuned on a large dataset of human preferences to ensure helpfulness and safety. It is designed to enable developers and organizations to build generative AI-powered tools and experiences.

Getting Access to Llama2

Before using Llama2, we must sign up for Meta’s waiting list. Fill in the form, and within 1 or 2 days you will receive a link to download the model.

How to Access and Use Llama2?

- Interact with the Chatbot Demo: The easiest way to use Llama 2 is to visit llama2.ai, a chatbot demo hosted by Andreessen Horowitz. You can ask the model questions on any topic you are interested in, or request creative content by using specific prompts.

- Download the Llama 2 Code: If you want to run Llama 2 on your own machine or modify the code, you can download it from Meta AI.

- Access through Microsoft Azure: Another option is Microsoft Azure, a cloud computing service that offers various AI solutions. You can find Llama 2 in the Azure AI model catalog, where you can browse, deploy, and manage AI models. You will need an Azure account and subscription to use this service.

Do the Setup

Once you receive the email, clone the repository locally.

```bash
git clone https://github.com/facebookresearch/llama.git
cd llama
```

Next, download the model with the download.sh script (I assume you are using Linux or already have WSL installed):

```bash
chmod +x download.sh
bash download.sh
```

Tip: In my case, the script failed because Windows had converted its line endings to \r\n (CRLF), while bash expects Unix-style \n (LF) endings. You can fix this in a text editor such as Notepad++ or VS Code by replacing every \r\n with \n.
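If you prefer a script to an editor, a minimal Python sketch like the following performs the same CRLF-to-LF conversion. The `crlf_to_lf` helper is our own, not part of the Llama repository, and it assumes the file is small enough to read into memory at once.

```python
# Convert Windows (CRLF) line endings to Unix (LF) so bash can run a script.
# Assumption: the file fits in memory.
from pathlib import Path


def crlf_to_lf(path: str) -> int:
    """Rewrite `path` in place with LF endings; return how many CRLFs were replaced."""
    file = Path(path)
    data = file.read_bytes()
    count = data.count(b"\r\n")
    if count:
        file.write_bytes(data.replace(b"\r\n", b"\n"))
    return count
```

Usage: run `crlf_to_lf("download.sh")` from the repository folder, then start the script again with bash.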

You will be prompted to paste the URL you received in the email and then select which models to download. You have 48 hours to use the link; after that, you will need to request a new one through the form.

Once you have the model stored, continue with the setup.

Dependencies

I recommend installing Conda, a package manager with a huge collection of ready-to-use libraries that saves you the fuss of installing dependencies one by one.

With Conda installed, go to the project folder and install the dependencies.

```bash
pip install -e .
```

In my case, I also had to install a few extra packages to run CUDA, NVIDIA's parallel computing platform that allows code to run on the GPU.

```bash
conda install cuda -c nvidia/label/cuda-11.8.0
conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia
```
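Before going further, it is worth confirming that PyTorch can actually see the GPU. The sketch below relies on the standard `torch.cuda.is_available()`, `torch.cuda.device_count()`, and `torch.version.cuda`; the `cuda_status` wrapper itself is a hypothetical helper that also reports when PyTorch is not installed.

```python
# Quick sanity check that the CUDA build of PyTorch is installed and sees a GPU.

def cuda_status() -> dict:
    """Summarize PyTorch/CUDA availability without crashing if torch is missing."""
    try:
        import torch
    except ImportError:
        return {"torch_installed": False, "cuda_available": False,
                "device_count": 0, "cuda_version": None}
    available = torch.cuda.is_available()
    return {
        "torch_installed": True,
        "cuda_available": available,
        "device_count": torch.cuda.device_count() if available else 0,
        "cuda_version": torch.version.cuda,  # None for CPU-only builds
    }


print(cuda_status())
```

If `cuda_available` is False after the installs above, the CPU-only PyTorch build is probably the one being picked up.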

You are ready to go! Or almost, if you are running this on Windows. The generator uses NCCL, a GPU communication library that PyTorch does not support on Windows. You need to replace it with gloo on line 62 of llama/generation.py:

```python
torch.distributed.init_process_group("gloo")
```
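Hard-coding gloo works, but if you move between Windows and Linux machines you may prefer to pick the backend at runtime. The `choose_backend` function below is a hypothetical helper, not part of the llama repository, and it assumes NCCL should only be used on Linux with CUDA.

```python
# Pick a torch.distributed backend that works on the current platform.
# Assumption: NCCL only on Linux with CUDA; everywhere else, fall back to gloo.
import sys


def choose_backend(platform: str = sys.platform, cuda_available: bool = True) -> str:
    """Return "nccl" on Linux with CUDA, otherwise "gloo"."""
    if platform.startswith("linux") and cuda_available:
        return "nccl"
    return "gloo"


# Hypothetical use inside llama/generation.py instead of a hard-coded backend:
# torch.distributed.init_process_group(
#     choose_backend(cuda_available=torch.cuda.is_available()))
print(choose_backend())
```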

Let’s Use It!

You can ask your own questions by editing example_chat_completion.py, but for now, run it with the default values to check that everything works:

```bash
python -m torch.distributed.run --nproc_per_node 2 example_chat_completion.py --ckpt_dir llama-2-13b-chat/ --tokenizer_path tokenizer.model --max_seq_len 512 --max_batch_size 4
```
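The `--nproc_per_node` value is not arbitrary: according to the Llama 2 repository README, each checkpoint is split for a fixed model-parallel (MP) size of 1 for 7B, 2 for 13B, and 8 for 70B, which is why the 13B chat model runs with 2 processes. The sketch below builds the same command for any checkpoint folder; the `build_command` helper and the assumption that folder names contain `-7b`, `-13b`, or `-70b` are ours.

```python
# Build the torch.distributed.run command for a Llama 2 checkpoint folder.
# Model-parallel sizes per model size, as listed in the Llama 2 README.
import sys
from typing import List

MP_SIZE = {"7b": 1, "13b": 2, "70b": 8}


def build_command(ckpt_dir: str, tokenizer: str = "tokenizer.model",
                  max_seq_len: int = 512, max_batch_size: int = 4) -> List[str]:
    """Return argv for running example_chat_completion.py on `ckpt_dir`."""
    # Assumption: the folder name contains "-7b", "-13b", or "-70b".
    size = next((s for s in MP_SIZE if f"-{s}" in ckpt_dir.lower()), None)
    if size is None:
        raise ValueError(f"cannot infer model size from {ckpt_dir!r}")
    return [
        sys.executable, "-m", "torch.distributed.run",
        "--nproc_per_node", str(MP_SIZE[size]),
        "example_chat_completion.py",
        "--ckpt_dir", ckpt_dir,
        "--tokenizer_path", tokenizer,
        "--max_seq_len", str(max_seq_len),
        "--max_batch_size", str(max_batch_size),
    ]


# Usage from the repository root:
# import subprocess; subprocess.run(build_command("llama-2-13b-chat/"), check=True)
print(" ".join(build_command("llama-2-13b-chat/")))
```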

If everything goes well, after a few minutes you will see the example questions and your local model’s answers. Here is what we got:

User: I am going to Paris, what should I see?

A: Paris, the capital of France, is known for its stunning architecture, art museums, historical landmarks, and romantic atmosphere.

Here are some of the top attractions to see in Paris:

The Eiffel Tower: The iconic Eiffel Tower is one of the most recognizable landmarks in the world and offers breathtaking views of the city.

The Louvre Museum: The Louvre is one of the world's largest and most famous museums, housing an impressive collection of art and artifacts, including the Mona Lisa.

Notre-Dame Cathedral: This beautiful cathedral is one of the most famous landmarks in Paris and is known for its Gothic architecture and stunning stained glass windows.

These are just a few of the many attractions that Paris has to offer. With so much to see and do, it's no wonder that Paris is one of the most popular tourist destinations in the world.

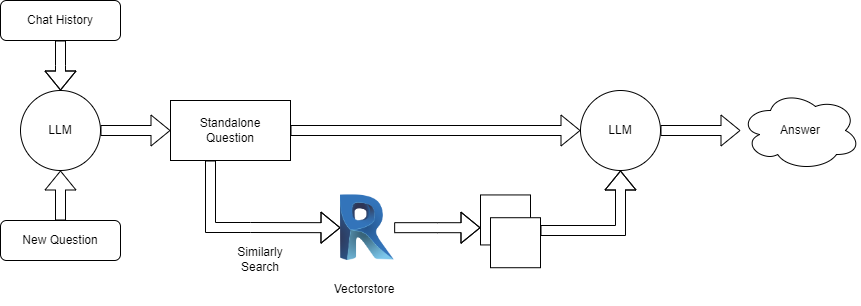
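To ask your own questions instead of the defaults, edit the dialogs in example_chat_completion.py. In the version of the repository we used, each dialog is a list of messages with "role" and "content" keys: an optional "system" message first, then "user" and "assistant" turns alternating and ending with a "user" turn. The hypothetical `validate_dialog` helper below checks that ordering before you wait minutes for the model; it mirrors, but is not, the repository's own checks.

```python
# Sketch of the dialog structure used by example_chat_completion.py,
# plus a hypothetical validator for the expected role order.
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}
Dialog = List[Message]


def validate_dialog(dialog: Dialog) -> None:
    """Raise ValueError unless: optional system, then user/assistant alternating, ending on user."""
    msgs = dialog[1:] if dialog and dialog[0]["role"] == "system" else dialog
    if not msgs:
        raise ValueError("dialog needs at least one user message")
    for i, msg in enumerate(msgs):
        expected = "user" if i % 2 == 0 else "assistant"
        if msg["role"] != expected:
            raise ValueError(f"message {i}: expected {expected!r}, got {msg['role']!r}")
    if msgs[-1]["role"] != "user":
        raise ValueError("last message must come from the user")


dialogs: List[Dialog] = [
    [{"role": "user", "content": "I am going to Paris, what should I see?"}],
    [
        {"role": "system", "content": "Always answer concisely."},
        {"role": "user", "content": "What is BIM?"},
    ],
]
for d in dialogs:
    validate_dialog(d)
```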

How Could We Use This to Query a Revit Model?

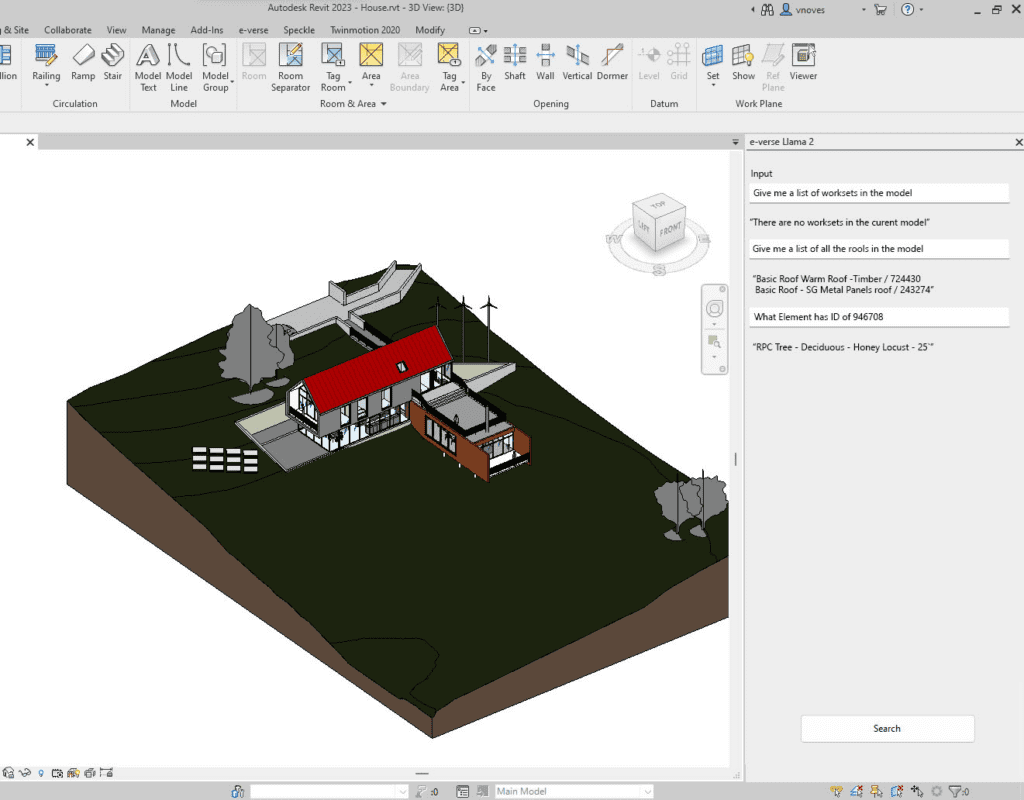

Now that we know how to use Llama2, an exciting next step would be to ask questions about a Revit model in natural language.

First, we need to translate the Revit file data into a vector store. The exported data could come from anything from an Excel spreadsheet to a database such as SQLite or another SQL database.
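The idea behind a vector store is retrieval: each exported element becomes a piece of text and a vector, the question is turned into a vector too, and the closest elements are pasted into the prompt so Llama 2 answers from the model data. The sketch below shows that flow under clear assumptions: the element records and field names are hypothetical, and the bag-of-words `embed` function is a toy stand-in for a real embedding model.

```python
# Minimal retrieval sketch: exported Revit elements -> text -> vectors,
# then the most similar elements are put into a Llama 2 chat dialog.
# Assumptions: hypothetical element fields; toy bag-of-words embedding.
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (replace with a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(elements: List[Dict[str, str]]) -> List[Tuple[str, Counter]]:
    docs = [", ".join(f"{k}: {v}" for k, v in e.items()) for e in elements]
    return [(d, embed(d)) for d in docs]


def retrieve(index: List[Tuple[str, Counter]], question: str, k: int = 2) -> List[str]:
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]


def make_dialog(question: str, context: List[str]) -> List[Dict[str, str]]:
    """Wrap the retrieved elements in a chat dialog for the Llama 2 chat model."""
    system = "Answer using only these Revit elements:\n" + "\n".join(context)
    return [{"role": "system", "content": system},
            {"role": "user", "content": question}]


elements = [  # hypothetical export rows
    {"Id": "101", "Category": "Doors", "Level": "Level 1", "Type": "Single-Flush 36x84"},
    {"Id": "102", "Category": "Walls", "Level": "Level 1", "Type": "Generic - 200mm"},
    {"Id": "103", "Category": "Doors", "Level": "Level 2", "Type": "Double-Glass 72x84"},
]
index = build_index(elements)
question = "Which doors are on Level 2?"
dialog = make_dialog(question, retrieve(index, question))
print(dialog[0]["content"])
```

In a real pipeline, the index would live in a proper vector store and be rebuilt from each export, while the dialog would be passed to the chat model as in example_chat_completion.py.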

The main challenge is not the export or the integration with Llama2, but dealing with large BIM models that take a long time to export, so it is essential to get it right. Here are some tips:

1. You could trigger the export from the DocumentOpened event, so the model gets exported every time it is opened.

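```csharp
using Autodesk.Revit.DB;
using Autodesk.Revit.DB.Events;
using Autodesk.Revit.UI;

// Hypothetical add-in class name; register it in your .addin manifest
public class ExportOnOpenApp : IExternalApplication
{
    public Result OnStartup(UIControlledApplication application)
    {
        // Register the DocumentOpened event
        application.ControlledApplication.DocumentOpened += OnDocumentOpened;
        return Result.Succeeded;
    }

    public Result OnShutdown(UIControlledApplication application)
    {
        // Unregister the handler when Revit closes
        application.ControlledApplication.DocumentOpened -= OnDocumentOpened;
        return Result.Succeeded;
    }

    private void OnDocumentOpened(object sender, DocumentOpenedEventArgs args)
    {
        // This code runs every time a document is opened; start the export here
        Document doc = args.Document;
        TaskDialog.Show("Document Opened", "A document has been opened: " + doc.Title);
    }
}
```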

2. Use the IExportContext interface, which lets you export the contents of the model in the fastest way possible.

3. Don’t export everything. Check what was exported the last time and export only the changes made to the model since then.

4. Alternatively, you can use a tool like the Metamorphosis project, which tracks changes between Revit models.
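Tip 3 can be sketched with Python's built-in sqlite3: store a fingerprint of each exported element and only write elements that are new or whose data changed. This is a minimal illustration, not the author's exporter; it assumes each element arrives as a dict with a unique "Id", and the table name, schema, and `export_changes` helper are hypothetical.

```python
# Incremental export sketch: keep a hash per element and only write
# elements that are new or changed since the last export.
# Assumptions: elements are dicts with a unique "Id"; hypothetical schema.
import hashlib
import json
import sqlite3
from typing import Dict, Iterable


def fingerprint(element: Dict[str, str]) -> str:
    """Stable hash of an element's data (keys sorted so order does not matter)."""
    payload = json.dumps(element, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def export_changes(conn: sqlite3.Connection, elements: Iterable[Dict[str, str]]) -> int:
    """Upsert only new/changed elements; return how many rows were written."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS elements ("
        "id TEXT PRIMARY KEY, hash TEXT NOT NULL, data TEXT NOT NULL)"
    )
    written = 0
    for element in elements:
        h = fingerprint(element)
        row = conn.execute("SELECT hash FROM elements WHERE id = ?", (element["Id"],)).fetchone()
        if row is not None and row[0] == h:
            continue  # unchanged since the last export
        conn.execute(
            "INSERT OR REPLACE INTO elements (id, hash, data) VALUES (?, ?, ?)",
            (element["Id"], h, json.dumps(element, sort_keys=True)),
        )
        written += 1
    conn.commit()
    return written


conn = sqlite3.connect(":memory:")
first = [{"Id": "101", "Category": "Doors", "Level": "Level 1"},
         {"Id": "102", "Category": "Walls", "Level": "Level 1"}]
print(export_changes(conn, first))   # 2: both elements are new
second = [{"Id": "101", "Category": "Doors", "Level": "Level 1"},
          {"Id": "102", "Category": "Walls", "Level": "Level 2"}]
print(export_changes(conn, second))  # 1: only element 102 changed
```

Deleted elements are not handled here; a real exporter would also remove rows whose IDs no longer appear in the model.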

As a result, you can end up with something like the image below:

Conclusion

The most exciting aspect here is that new developments like Llama2 are released every day, and that this model and others like Stable Diffusion are open source, which opens up almost unlimited possibilities.

I have no doubt that we will soon have fully developed solutions that automate most of the BIM process, and I don’t mean only modeling and coordination, but the design and conceptual process itself. Stay tuned for future AI posts!

Pablo Derendinger

https://www.e-verse.com

I'm an architect who decided to make his life easier by coding. Curious by nature, I approach challenges armed with lateral thinking and a few humble programming skills. I love working with passionate people and pushing the boundaries of the industry. Bring me your problems: impossible is possible, it just takes more time.