Running Large Language Models Locally: The State of the Art in 2025

Large Language Models (LLMs) are transforming the way we engage with AI, driving solutions from chatbots to coding assistants.

However, a common misconception persists: leveraging AI requires sending data to the cloud, compromising privacy, and depending on external servers.

That is no longer the case. Recent advancements have made it simpler than ever to run LLMs locally on your own device.

Developers, researchers, and businesses alike can now install, operate, and fine-tune cutting-edge models—entirely offline.

This capability is particularly valuable to the architecture, engineering, and construction (AEC) industry, where firms can use local AI to review project documentation, generate site reports, or draft contracts, all while keeping data confidential and complying with strict client agreements.

Imagine using an AI assistant without risking an NDA breach, or summarizing project specifications without exposing sensitive information.

In this article, we’ll explore the best tools and models available, helping you select the right setup to integrate AI directly into your local environment.

Why Run LLMs Locally?

Before we dive into the tools and models, let’s address the why. Running AI locally comes with significant advantages:

- Privacy & Security – Your data never leaves your device. No more sending sensitive information to third-party servers.

- Speed & Responsiveness – No network round trips. Response time depends only on your hardware, and the model keeps working offline.

- Full Control – Customize models however you want—no restrictions imposed by cloud providers.

- Cost Savings – No API fees or cloud subscriptions. Once installed, the model runs at no cost beyond your own hardware and electricity.

The Best Open-Weight LLMs You Can Run Locally

You may see hundreds of articles describing these as open-source LLMs, but that label is inaccurate.

At least in the cases we checked, none of the models come with the instructions needed to create a similar model. What is published is the result of the training, expressed as the weights that transform inputs into outputs we can use. That is why we call them open-weight models.

They differ in their licenses and in the amount of information used to train them.

There are many LLMs available today, each with different strengths. Below are some of the best options for local execution:

1. DeepSeek

A relatively new but highly efficient model, DeepSeek offers impressive performance at a lower computational cost.

To be clear: running this model locally does not expose your information to third-party countries. The model only interacts with you and your infrastructure. (Be aware that I can’t confirm the same if you use it through their website.)

✅ Pros:

- Highly optimized for local execution, consuming less power than competitors.

- Cost-effective in terms of hardware requirements.

❌ Cons:

- Users have reported that some content is censored.

2. Llama

Meta’s Llama series has become one of the most popular open-weight AI models, offering strong performance and flexibility.

✅ Pros:

- One of the first open-weight models, it has been on the market for a long time and has a large community that enables deep customization.

- Excels in natural language processing (NLP) tasks.

❌ Cons:

- Demands significant computational power, making it less ideal for lower-end hardware.

- Some users find its responses overly cautious.

3. Mistral

Mistral’s models, including Mistral 7B and Mixtral 8x7B, balance power and efficiency, making them great for local AI.

✅ Pros:

- Strong performance in various NLP applications.

- Requires fewer resources than Llama, making it more accessible.

❌ Cons:

- Less flexibility in fine-tuning compared to Llama.

- Some users report lower creativity in responses.

4. Gemma

Developed by Google as a user-friendly open-weight model, Gemma focuses on accessibility and ease of use.

✅ Pros:

- Well-structured and natural responses.

- Google provides good implementation examples, which makes it easier for users to modify and adapt the model.

❌ Cons:

- Performance varies across different tasks.

- Requires considerable computational resources.

Comparative Table of Open-Weight LLMs: DeepSeek, Llama, Mistral, and Gemma

| Model | Description | Pros | Cons |
| --- | --- | --- | --- |
| DeepSeek | A Chinese AI model known for high efficiency and cost-effectiveness. | Optimized for local execution with lower power consumption. Cost-effective hardware requirements. Open-weight, allowing for widespread use and adaptation. | Some content may be censored due to alignment with Chinese regulations. Potential data privacy concerns when running on their website. |
| Llama (Llama 3.1, Llama 3.2) | Meta’s open-weight AI models with a strong community and flexibility. | Established presence with extensive community support for customization. Excels in natural language processing tasks. | Requires significant computational power, less ideal for lower-end hardware. Responses may be overly cautious. |
| Mistral | Models balancing performance and efficiency, suitable for local AI applications. | Strong performance in various NLP applications. Requires fewer resources than Llama, enhancing accessibility. | Less flexibility in fine-tuning compared to Llama. Some users report lower creativity in responses. |
| Gemma | Google’s user-friendly open-weight model focusing on accessibility. | Produces well-structured and natural responses. Extensive implementation examples facilitate modification and adaptation. | Performance varies across different tasks. Requires considerable computational resources. |

How to Run LLMs Locally

Great models need great tools. Here are the best platforms for running LLMs on your local machine.

Note that all of these options need a powerful GPU to run the models at a decent speed. Some of the tools can also run on a CPU, at the cost of some speed.
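A rough way to judge whether a model fits on your hardware is to multiply its parameter count by the bytes stored per weight. The sketch below is a back-of-envelope estimate only: it ignores the context cache and runtime overhead, and the bit widths (16, 8, and 4) are common quantization choices used here as assumptions, not figures from any specific tool.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # A 7B-parameter model at three assumed precisions.
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB")
```

Under these assumptions, a 7B model needs roughly 14 GB at 16-bit precision and about 3.5 GB at 4-bit, which is why quantized versions are the usual choice for consumer GPUs.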

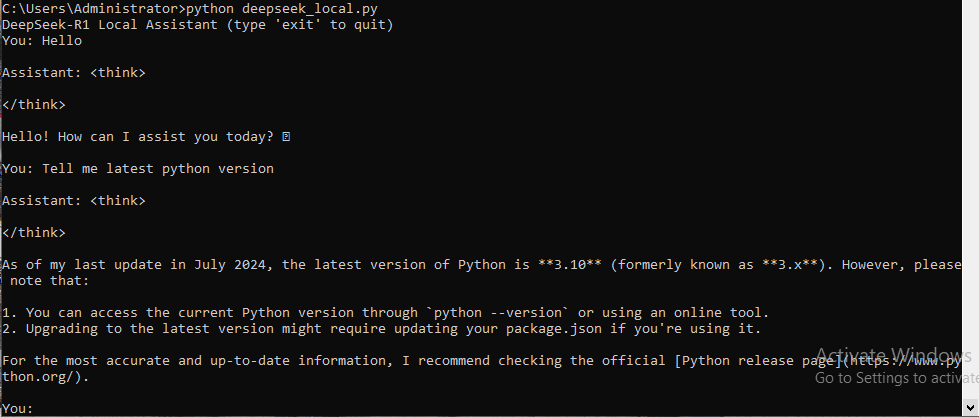

1. Ollama

A command-line-based tool that allows you to run AI models effortlessly.

✅ Pros:

- Works on Windows, macOS, and Linux.

- Supports a variety of models like DeepSeek, Llama, Phi, and Qwen.

❌ Cons:

- No graphical interface—requires using the terminal.

- Some learning curve for beginners.
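Although Ollama is driven from the terminal, it also serves a local HTTP API once it is running. The sketch below sends one prompt to that API using only the Python standard library. It assumes Ollama is listening on its default address (localhost:11434) and that a model named `llama3.2` has already been pulled; both are assumptions, so adjust them to your setup.

```python
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=120) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        # "llama3.2" is an assumed model name; use any model you have pulled.
        print(ask("llama3.2", "Summarize the purpose of a site report in one sentence."))
    except OSError as exc:
        # Connection failures and HTTP errors from urllib are OSError subclasses.
        print(f"Could not reach Ollama: {exc}")
```

Because the request never leaves localhost, the prompt and the answer stay on your machine.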

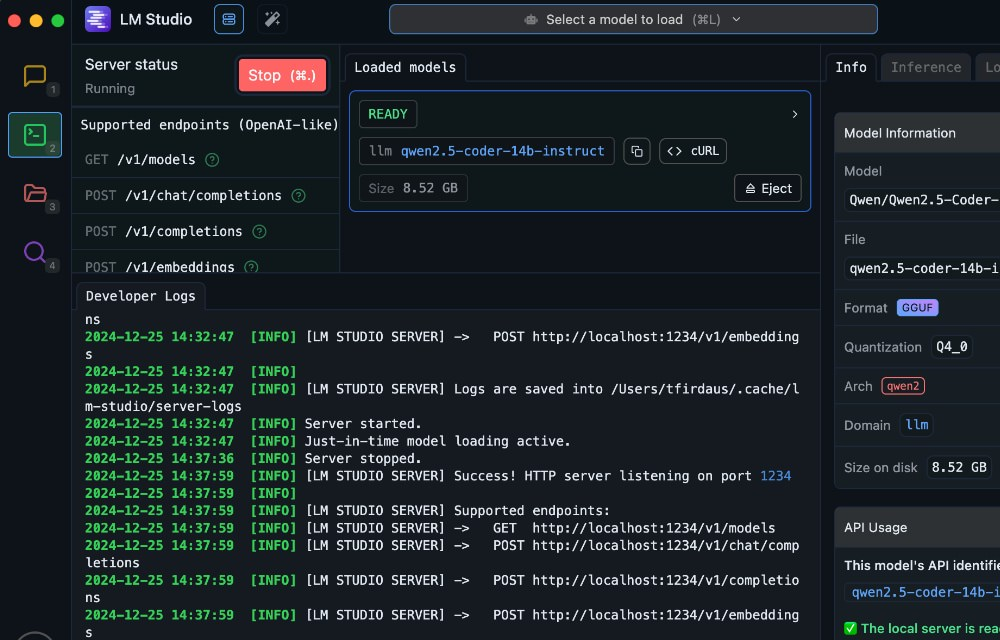

2. LM Studio

A more user-friendly option with a graphical interface.

✅ Pros:

- Easy to install and use, with a built-in model search and downloader.

- Supports multiple models including Llama, Mistral, and DeepSeek.

❌ Cons:

- Higher hardware requirements.

- Less fine-tuning capability than terminal-based options.
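Besides its graphical interface, LM Studio can expose a loaded model through a local server that follows the OpenAI chat-completions format. The sketch below assumes that server has been started and listens on port 1234; the port, the system message, and the model identifier you pass in are assumptions to adapt to your own installation.

```python
import json
import urllib.request

# Assumed address of LM Studio's local server; check the port in your settings.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_payload(model: str, user_message: str, temperature: float = 0.2) -> dict:
    """Build a chat-completions payload with one system and one user message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
    }


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of a chat-completions style response."""
    return response["choices"][0]["message"]["content"]


def chat(model: str, user_message: str) -> str:
    """Send one message to the local server and return the reply text."""
    request = urllib.request.Request(
        LM_STUDIO_URL,
        data=json.dumps(build_chat_payload(model, user_message)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return extract_reply(json.loads(resp.read()))
```

With the server running, a call such as `chat("your-model-id", "Draft a two-line project status update.")` would return the model's reply, where `your-model-id` is a placeholder for whichever model you loaded.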

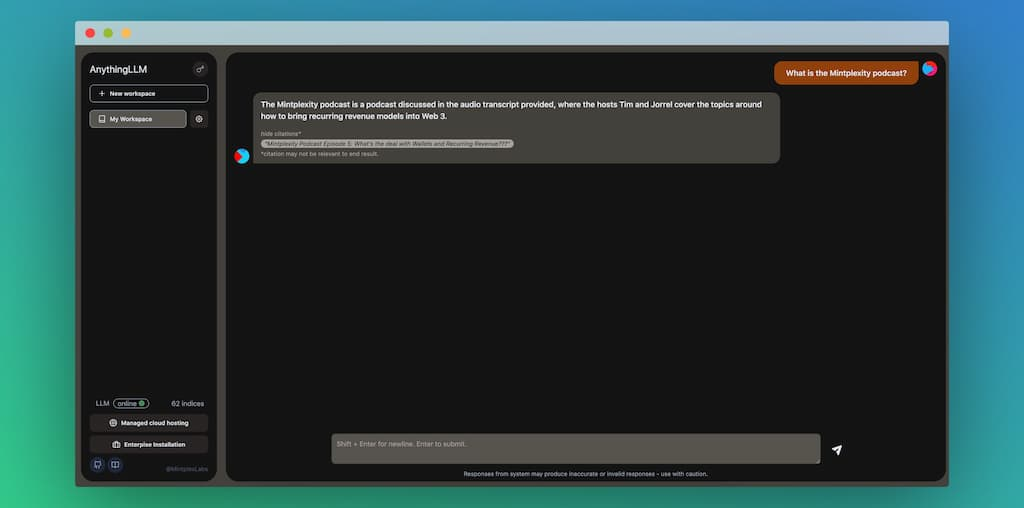

3. AnythingLLM

Designed as an all-in-one AI assistant, this tool enables local AI chat and document processing.

✅ Pros:

- Supports document-based AI chat.

- Strong focus on privacy and customization.

❌ Cons:

- Can be complex for new users due to its many features.

- Users with limited hardware may need cloud-based subscriptions for better performance.
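To give a feel for what document-based chat involves, here is a toy sketch of the general idea: split a document into chunks, pick the chunks most related to the question, and place them in the prompt sent to the model. This is not how AnythingLLM is implemented; it uses simple keyword overlap as a stand-in for the retrieval step, and every function name here is hypothetical.

```python
import re


def split_into_chunks(text: str, max_words: int = 40) -> list[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_chunks(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks sharing the most tokens with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(question_tokens & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Assemble a prompt that asks the model to answer from the given context."""
    context = "\n---\n".join(chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Made-up specification text, used only for illustration.
    spec = (
        "Concrete for the foundation slab shall reach 30 MPa at 28 days. "
        "All exterior doors shall be fire rated for 60 minutes. "
        "The contractor submits weekly site reports every Friday."
    )
    question = "When are site reports submitted?"
    chunks = split_into_chunks(spec, max_words=12)
    print(build_prompt(question, top_chunks(question, chunks, k=1)))
```

The resulting prompt can then be sent to any locally running model, so neither the document nor the question has to leave your machine.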

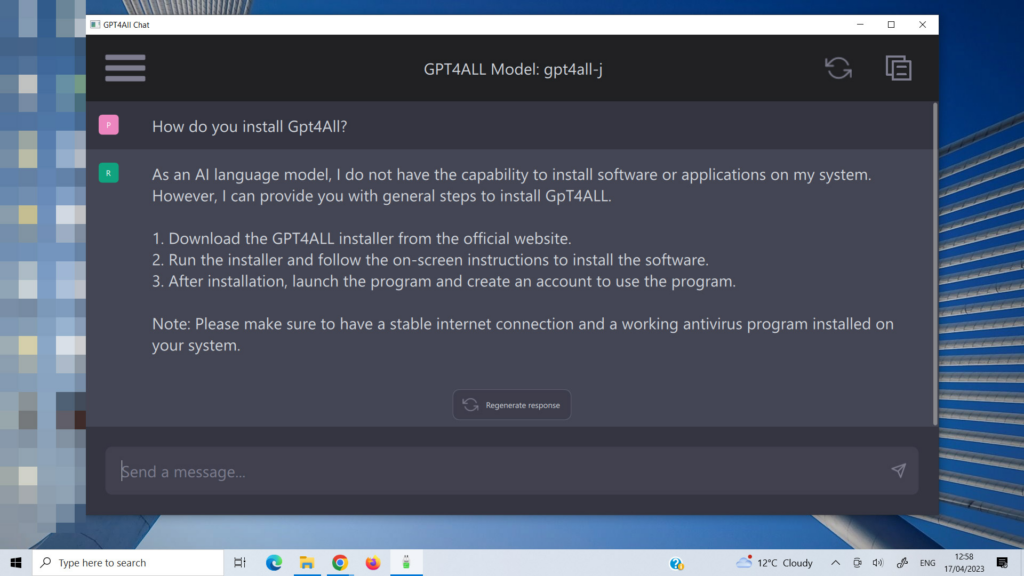

4. GPT4All

A powerful AI framework offering access to more than a thousand open-weight models.

✅ Pros:

- Can install and run over 1,000 different models.

- Works on both CPU and GPU.

❌ Cons:

- Uses a freemium model—the free version has token limitations.

- Can be resource-intensive for advanced models.

Bringing AI to Your Machine Has Never Been Easier

The future of AI isn’t just in the cloud—it’s also on your laptop, desktop, or even a Raspberry Pi.

Thanks to open-weight models and user-friendly tools, running a powerful LLM locally is no longer a challenge reserved for AI experts.

With privacy, cost savings, and total control on your side, why rely on external services? Download a model, install the right tool, and bring the power of AI home.

In the next few days we will publish a detailed article explaining how to implement these examples. Meanwhile, we invite you to explore other ways to integrate AI into your projects.

Some sources to keep learning about LLMs

- DeepSeek:

  - Official Website: https://www.deepseek.com

  - Tiger Brokers Adopts DeepSeek Model: https://www.reuters.com/technology/artificial-intelligence/tiger-brokers-adopts-deepseek-model-chinese-brokerages-funds-rush-embrace-ai-2025-02-18/

  - DeepSeek’s Impact on AI Control: https://www.sfchronicle.com/opinion/openforum/article/deepseek-ai-technology-chatgpt-20152456.php

  - DeepSeek Disrupting the AI Sector: https://www.reuters.com/technology/artificial-intelligence/what-is-deepseek-why-is-it-disrupting-ai-sector-2025-01-27/

- Llama (Llama 3.1, Llama 3.2):

  - Meta’s Llama 3.1 Announcement: https://ai.meta.com/blog/meta-llama-3-1/

  - Llama Model Downloads: https://www.llama.com/llama-downloads/

  - Llama 3 Models Technical Paper: https://arxiv.org/abs/2407.21783

- Mistral:

  - Official Website: https://mistral.ai/en

- Gemma:

  - Model Details on Hugging Face: https://huggingface.co/google/gemma-2-9b-it

Pablo Derendinger

https://www.e-verse.com

I'm an architect who decided to make his life easier by coding. Curious by nature, I approach challenges armed with lateral thinking and a few humble programming skills. I love to work with passionate people and push the boundaries of the industry. Bring me your problems. Impossible is possible, but it takes more time.